GIFT Assistant Professor Songan Zhang's Team Publishes Review Paper on 3D Reconstruction for Autonomous Driving in Top Intelligent Transportation Journal

A team from the Innovation Center for Intelligent Connected Electric Vehicles at Shanghai Jiao Tong University (SJTU) has published a review paper titled "Learning-based 3D Reconstruction in Autonomous Driving: A Comprehensive Survey" in IEEE Transactions on Intelligent Transportation Systems (T-ITS), a top-tier journal in the field of intelligent transportation. The paper traces the evolution and application of learning-based 3D reconstruction in autonomous driving scenarios, offering a systematic technical roadmap and an outlook on how the technology can address the long-tail data problem and enable high-fidelity closed-loop simulation. The first author is Liewen Liao, a Ph.D. student (class of 2024) at the Global Institute of Future Technology (GIFT), and the corresponding author is Assistant Professor Songan Zhang.

Research Background

As autonomous driving technology advances towards L4/L5 levels, the demands on environmental perception and system robustness are becoming increasingly stringent. Reliable autonomous driving depends intrinsically on accurate perception and a holistic understanding of the 3D environment, which in turn requires vast amounts of high-quality, multimodal data (e.g., images and LiDAR) covering a wide range of extreme scenarios. In the real world, however, acquiring such large-scale data is not only prohibitively expensive but also poses significant safety risks, especially in rare or emergency situations.

To overcome this data bottleneck, digital twin and simulation technologies have become critical pathways. By constructing high-fidelity digital replicas of the physical world, it is possible to generate large amounts of training data and test algorithms in virtual environments at low cost and without physical risk. Conventional reconstruction methods (e.g., photogrammetry and Structure from Motion, SfM) often struggle with complex lighting, textureless areas, and dynamic traffic participants. In contrast, learning-based 3D reconstruction leverages the capacity of neural networks to model scenes implicitly or explicitly, offering a breakthrough route to driving scenes that are both photorealistic and geometrically accurate. It has emerged as an essential infrastructure technology in the autonomous driving technology stack.

Current Research

Over the past few years, the field of 3D reconstruction has undergone a paradigm shift from traditional geometric methods to deep learning approaches. The introduction of Neural Radiance Fields (NeRF) in 2020 marked a significant turning point, demonstrating that neural scene representations could achieve unprecedented rendering realism. Then, in 2023, 3D Gaussian Splatting (3DGS) introduced an explicit representation built from 3D Gaussian primitives. While maintaining high fidelity, 3DGS overcomes NeRF's slow rendering speed through efficient rasterization, enabling real-time rendering.
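Although NeRF and 3DGS represent scenes very differently, both ultimately produce a pixel's color by front-to-back alpha compositing: each sample (NeRF) or Gaussian (3DGS) contributes its color weighted by its opacity and by the transmittance left over from everything in front of it. The following is a minimal illustrative sketch in Python, not code from the surveyed paper; the function names are hypothetical, and the early-termination threshold of 1e-4 is an assumed value chosen for illustration.

```python
import math
from typing import List, Sequence, Tuple

Color = Tuple[float, float, float]


def composite_front_to_back(colors: Sequence[Color], alphas: Sequence[float]) -> Color:
    """Blend samples or primitives already sorted from nearest to farthest.

    Computes C = sum_i c_i * alpha_i * prod_{j<i} (1 - alpha_j).
    """
    out = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light not yet absorbed by closer items
    for c, a in zip(colors, alphas):
        weight = transmittance * a  # this item's contribution to the pixel
        for k in range(3):
            out[k] += weight * c[k]
        transmittance *= 1.0 - a
        if transmittance < 1e-4:  # assumed threshold: stop once the pixel is nearly opaque
            break
    return (out[0], out[1], out[2])


def nerf_alphas(densities: Sequence[float], deltas: Sequence[float]) -> List[float]:
    """Convert NeRF volume densities sigma_i and sample spacings delta_i into
    per-sample opacities alpha_i = 1 - exp(-sigma_i * delta_i)."""
    return [1.0 - math.exp(-s * d) for s, d in zip(densities, deltas)]


# Usage: a half-transparent red sample in front of an opaque blue one.
pixel = composite_front_to_back([(1.0, 0.0, 0.0), (0.0, 0.0, 1.0)], [0.5, 1.0])
print(pixel)  # (0.5, 0.0, 0.5)
```

The two methods differ mainly in where the opacities come from: NeRF obtains densities by querying a neural network at many points sampled along each camera ray, which is costly, whereas 3DGS derives each alpha from a projected Gaussian's opacity and its 2D footprint at the pixel, then sorts and blends the Gaussians with a GPU rasterizer.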

Despite rapid growth in related research, existing reviews are often limited to a single technical approach (e.g., NeRF or 3DGS) or are detached from the specific context of autonomous driving (e.g., focusing mainly on indoor or small-object reconstruction). Autonomous driving scenarios have unique complexities: they involve large-scale unbounded environments, extremely sparse sensor viewpoints, and numerous fast-moving rigid or non-rigid traffic participants. The current literature lacks a systematic study that starts from the genuine needs of autonomous driving, covers reconstruction from static backgrounds to dynamic scenes, and extends to downstream applications (e.g., perception enhancement, closed-loop simulation, and world models). The industry urgently needs a comprehensive guide that bridges cutting-edge graphics technology with autonomous driving engineering practice.

Research Results

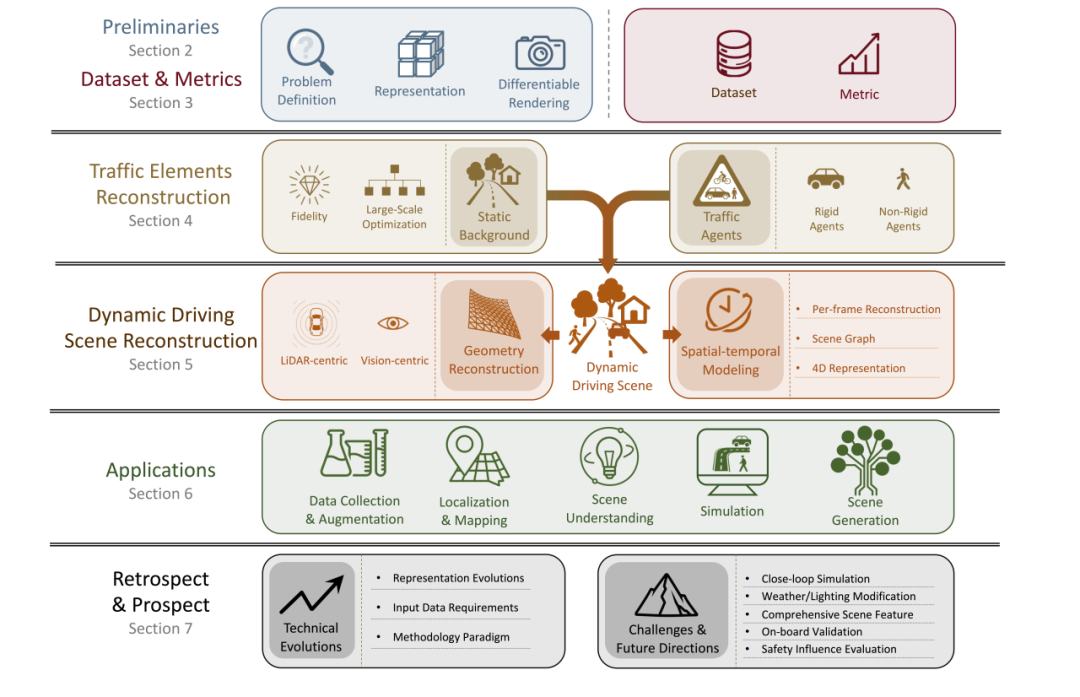

This paper provides a comprehensive and systematic review of learning-based 3D reconstruction technologies in the field of autonomous driving. Rather than simply cataloguing algorithms, the article constructs a novel taxonomy based on the practical challenges and technical requirements of autonomous driving:

· Hierarchical Analysis of Scene Element Reconstruction: The paper first delves into the challenges of balancing geometric fidelity with large-scale scene optimization in static background reconstruction. It then provides a detailed analysis of traffic participant reconstruction, differentiating between modeling strategies for rigid objects (e.g., vehicles) and non-rigid objects (e.g., pedestrians, cyclists), with particular focus on reconstruction techniques for human pose deformation and motion.

· Spatial-Temporal Modeling of Dynamic Driving Scenes: The paper summarizes three major paradigms for spatial-temporal modeling of dynamic scenes from an ego-vehicle perspective: per-frame reconstruction, scene graphs, and 4D representations. Among these, the paper identifies native 4D Gaussian representations as the most promising approach, since they can decouple static and dynamic elements automatically without costly 3D bounding box annotations.

· Application-Driven In-Depth Analysis: The paper explains how 3D reconstruction technology enables core autonomous driving tasks. These include data augmentation and multi-modal label generation (e.g., automatic generation of depth maps, semantic segmentation, and optical flow), simultaneous localization and mapping (SLAM), scene understanding (as a unified scene feature representation), and scene generation and simulation.

· Challenges and Prospects: Beyond summarizing the current state of the art, the paper identifies critical issues often overlooked in current research, including weather and lighting editing, the computational constraints of on-board deployment, and, most crucially, the quantitative assessment of how generated data affects autonomous driving safety. Finally, the paper explores future trends in combining world models and generative AI to achieve controllable scene generation and interactive closed-loop simulation.

Paper Link:

https://ieeexplore.ieee.org/document/11296945

Author Profile

Liewen Liao

Ph.D. student (class of 2024) at the Global Institute of Future Technology, SJTU. Research areas: 3D reconstruction, 3D generation, and closed-loop simulation for autonomous driving.

Corresponding Author Profile

Songan Zhang

Tenure-Track Assistant Professor at the Global Institute of Future Technology, SJTU, and a member of the Innovation Center for Intelligent Connected Electric Vehicles. Prof. Zhang received her B.S. and M.S. degrees in automotive engineering from Tsinghua University in 2013 and 2016, respectively, and her Ph.D. degree in mechanical engineering from the University of Michigan, USA, in 2021, under the supervision of Prof. Huei Peng, Director of Mcity. After graduating, she joined Ford Motor Company as a Researcher and concurrently served as Committee Chair of the Robotics Proposal Review Panel of the Ford-University of Michigan Joint Program. She has published over 30 papers in journals and conferences, including T-ITS, T-IV, CVPR, and ICCV. Research areas: decision-making and control algorithms for intelligent vehicles and robotics, reinforcement learning, meta-reinforcement learning, industrial embodied intelligence, and AI-assisted aircraft engine design.