GIFT Team Publishes Paper at IROS, a Premier Flagship Conference on Robotics

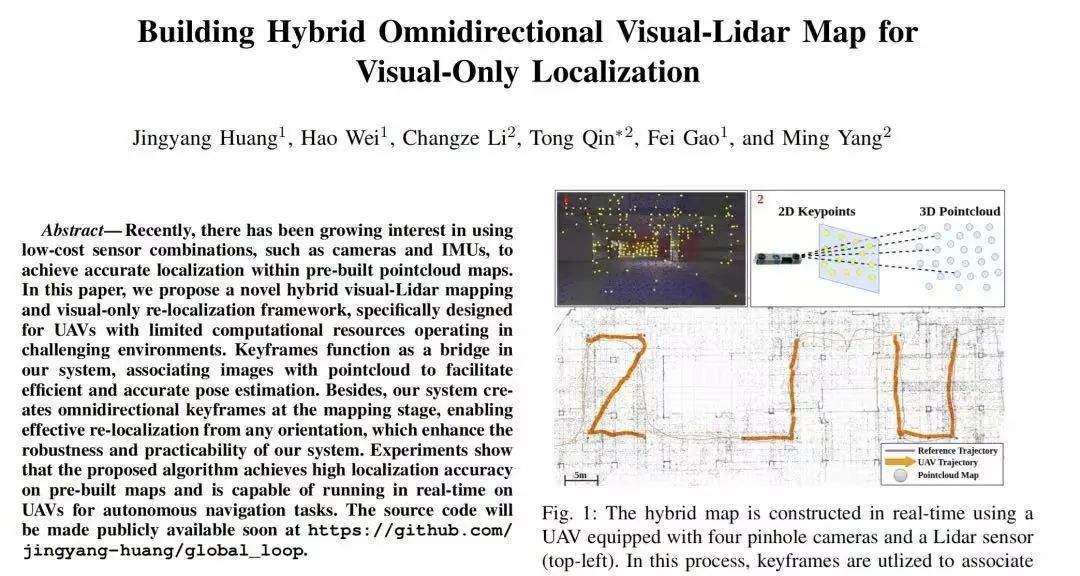

The Innovation Center of Intelligent Connected Electric Vehicles at Shanghai Jiao Tong University, under the guidance of Professors Qin Tong and Yang Ming, has published a research paper titled "Building Hybrid Omnidirectional Visual-Lidar Map for Visual-Only Localization" at the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), a premier flagship conference in robotics. The paper proposes a systematic solution for vision-only robot localization in challenging environments: it uses visual and LiDAR sensors to construct a hybrid prior map that carries both texture and geometric information. The first author is Huang Jingyang, a visiting student at GIFT.

Research Background

In recent years, vision-based localization has played a crucial role in robotics and autonomous driving. However, traditional methods such as SLAM (Simultaneous Localization and Mapping) and SfM (Structure from Motion) suffer from inaccurate depth estimation, high computational costs and poor adaptability, particularly in texture-sparse or dynamic scenes where monocular or stereo vision systems face limitations in accuracy and robustness. While LiDAR provides precise 3D structural data, pure LiDAR localization relies on expensive high-precision sensors and struggles to integrate seamlessly with visual data. Existing cross-modal localization methods primarily depend on geometric alignment (e.g., ICP) or deep learning, but the former is sensitive to point cloud quality, and the latter incurs heavy computational overhead, making real-time operation on resource-constrained platforms (e.g., drones) difficult. Thus, achieving efficient and high-precision cross-modal localization with low-cost sensor setups (e.g., camera + IMU) remains a critical challenge.

Research Results

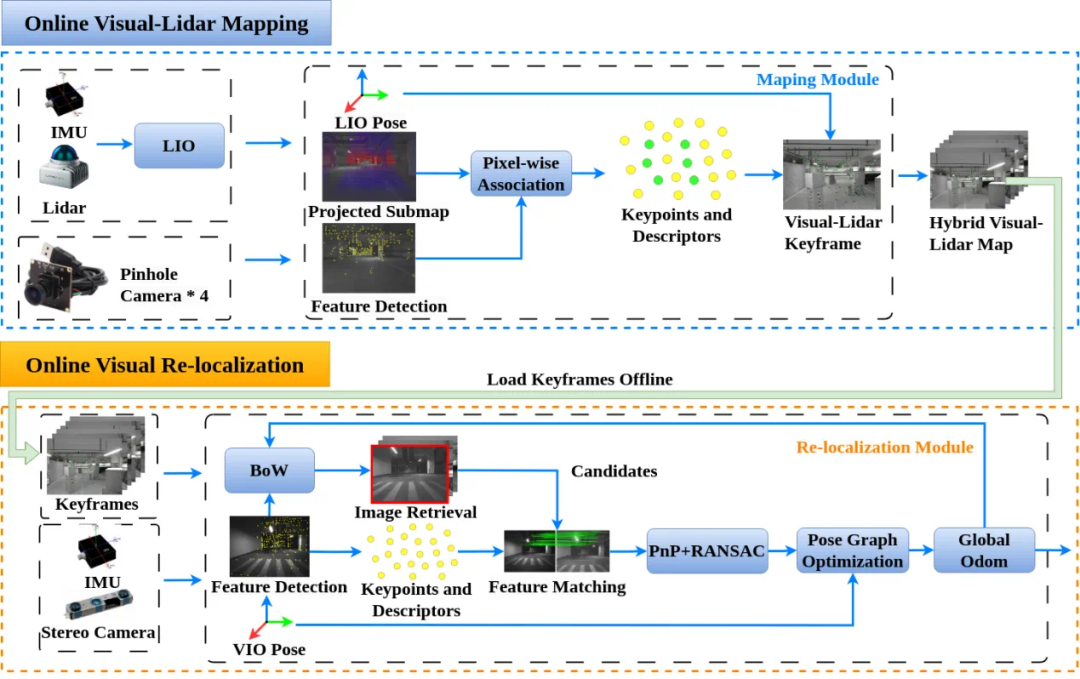

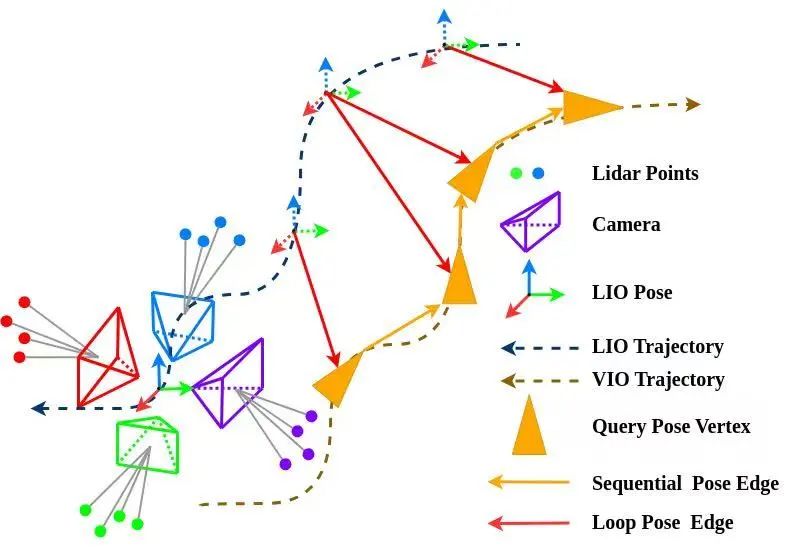

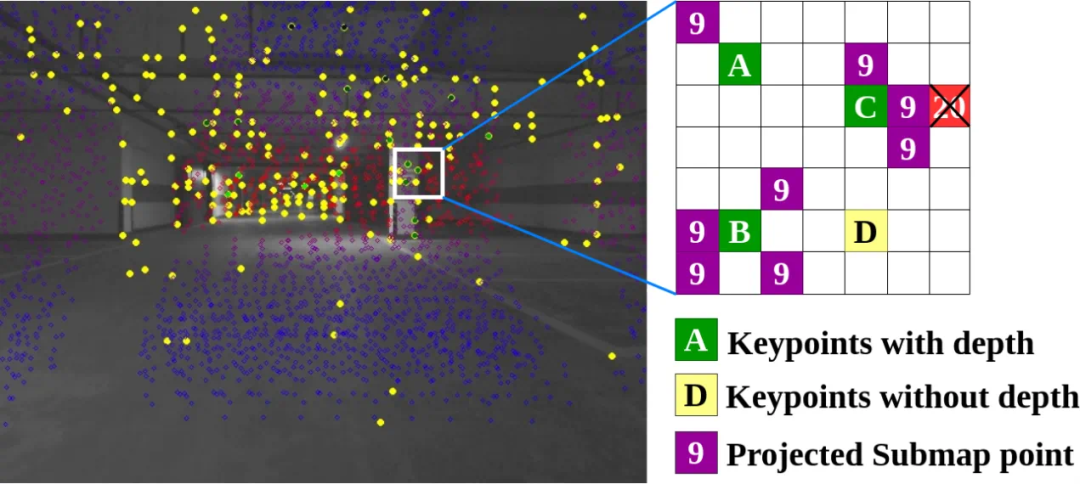

To address the challenges above, the paper introduces a hybrid visual-LiDAR keyframe mapping and vision-only relocalization framework tailored for computationally constrained drones. During the mapping phase, the system fuses images from a quad-camera setup with LiDAR data to construct an omnidirectional keyframe map, accurately associating visual features with LiDAR point cloud depths. This resolves the scale ambiguity and depth errors inherent in traditional visual mapping. In the relocalization phase, the system retrieves similar keyframes based on visual features, establishes 2D-2D matches and leverages the keyframe-associated 3D point clouds to form 2D-3D correspondences. The final pose is computed using PnP-RANSAC and pose graph optimization.
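The association step described above can be illustrated with a minimal sketch. It is not the paper's implementation: it assumes an ideal pinhole camera, LiDAR points already transformed into the camera frame, and a simple nearest-pixel rule. The function names (`project_point`, `associate_features`, `lift_matches`) and the `max_px_dist` threshold are hypothetical.

```python
import math

def project_point(p_cam, fx, fy, cx, cy):
    """Pinhole projection of a 3D point in the camera frame; None if behind the camera."""
    x, y, z = p_cam
    if z <= 0.0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)

def associate_features(features_px, lidar_points_cam, intrinsics, max_px_dist=2.0):
    """Map each feature index to the nearest projected LiDAR point within max_px_dist pixels."""
    fx, fy, cx, cy = intrinsics
    projected = []
    for p in lidar_points_cam:
        uv = project_point(p, fx, fy, cx, cy)
        if uv is not None:
            projected.append((uv, p))
    assoc = {}
    for idx, (u, v) in enumerate(features_px):
        best, best_d = None, max_px_dist
        for (pu, pv), p in projected:
            d = math.hypot(pu - u, pv - v)
            if d <= best_d:
                best, best_d = p, d
        if best is not None:
            assoc[idx] = best  # feature now carries a metric 3D point
    return assoc

def lift_matches(matches_2d2d, keyframe_assoc, query_px):
    """Turn (query_idx, keyframe_idx) 2D-2D matches into (query pixel, 3D point) pairs."""
    return [(query_px[q], keyframe_assoc[k])
            for q, k in matches_2d2d if k in keyframe_assoc]

# Toy data (hypothetical): intrinsics as (fx, fy, cx, cy), points in metres.
intrinsics = (500.0, 500.0, 320.0, 240.0)
lidar = [(0.0, 0.0, 5.0), (1.0, 0.0, 5.0), (0.0, 0.0, -1.0)]
keyframe_features = [(320.5, 240.0), (419.0, 241.0), (10.0, 10.0)]
assoc = associate_features(keyframe_features, lidar, intrinsics)

query_px = [(100.0, 100.0), (200.0, 200.0), (300.0, 300.0)]
pairs = lift_matches([(0, 0), (1, 2), (2, 1)], assoc, query_px)
```

The resulting 2D-3D pairs are the kind of input a PnP-RANSAC solver expects. A real system would also need to handle occlusion, for example by preferring the closest-depth point among several candidates near the same pixel.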

Framework diagram of the online mapping/localization algorithm

Key innovations of the framework include:

1) Omnidirectional Keyframe Design: A multi-camera system generates 360° keyframes, enabling fast relocalization from arbitrary viewpoints and enhancing environmental adaptability.

2) Lightweight Cross-Modal Fusion: Keyframes serve as a bridge to efficiently associate visual and LiDAR data, avoiding the high computational costs of traditional ICP methods.

3) Real-Time Optimization: The algorithm runs in real time on embedded devices, making it suitable for autonomous drone navigation. Experimental results show that the system approximates LiDAR-level localization accuracy in complex environments while meeting real-time computational demands.
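To make the omnidirectional keyframe idea concrete, the sketch below shows one way a single query image could be matched against keyframes that each store one descriptor per camera. It is an illustration under assumptions rather than the paper's retrieval method: the per-camera global descriptor vectors, the cosine-similarity score, and the names `cosine` and `retrieve` are all hypothetical.

```python
import math

def cosine(a, b):
    """Cosine similarity of two equal-length vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na > 0.0 and nb > 0.0 else 0.0

def retrieve(query_desc, keyframes, top_k=1):
    """Rank keyframes by their best-matching camera view.

    keyframes maps a keyframe id to a list of per-camera descriptors.
    Returns up to top_k tuples (score, keyframe_id, camera_index), best first.
    """
    scored = []
    for kf_id, cam_descs in keyframes.items():
        score, cam = max((cosine(query_desc, d), i) for i, d in enumerate(cam_descs))
        scored.append((score, kf_id, cam))
    scored.sort(reverse=True)
    return scored[:top_k]

# Toy map (hypothetical): two keyframes, four camera views each.
keyframes = {
    "kf0": [[1, 0, 0], [0, 1, 0], [0, 0, 1], [1, 1, 0]],
    "kf1": [[0, 1, 1], [1, 0, 1], [-1, 0, 0], [0, -1, 0]],
}
result = retrieve([0.0, 0.1, 1.0], keyframes, top_k=2)
```

Because every keyframe covers all viewing directions, the query can be matched whichever way the drone is facing; the returned camera index indicates which view to use for the subsequent 2D-2D feature matching.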

Multi-directional node pose graph optimization

Point cloud-pixel association method

Author Information

Huang Jingyang, visiting student at the Global Institute of Future Technology, SJTU. Research interests: Cross-modal robot localization systems.

Qin Tong, Associate Professor at the Global Institute of Future Technology, SJTU. Prof Qin earned his PhD from the Department of Electronic and Computer Engineering at the Hong Kong University of Science and Technology and previously worked at Huawei's Intelligent Automotive Solution BU. He was selected as one of Huawei's "Genius Youth." During his tenure as a perception and SLAM expert at Huawei, he contributed to the development of the Huawei ADS autonomous driving system, delivering leading-edge solutions that have been commercially deployed in multiple vehicle models. In recent years, he has published over ten high-quality papers as first/corresponding author in top-tier robotics journals and conferences such as TRO, JFR, RAL and ICRA. He received the IROS 2018 Best Student Paper Award and a TRO Best Paper Nomination Award. Research interests: Autonomous driving perception, mapping and localization; end-to-end AI large models; mobile robot SLAM.

Yang Ming, Distinguished Professor at the School of Sensing Science and Engineering, SJTU, PhD supervisor, and Director of the Innovation Center of Intelligent Connected Electric Vehicles. He is a leading talent in the National "Ten Thousand Talents Plan" for scientific and technological innovation. Currently, he serves as Deputy Director of the Intelligent Vehicle Committee and the Education Committee of the Chinese Association of Automation, Council Member of the Chinese Association for Artificial Intelligence, Deputy Director of the Intelligent Robotics Committee and Associate Editor of IEEE IROS-CPRB. He is also an editorial board member for IEEE Transactions on Intelligent Vehicles and IEEE Transactions on Intelligent Transportation Systems. With long-standing expertise in unmanned vehicles and intelligent robotics, Prof Yang has published over 200 academic papers and holds more than 50 authorized national patents. His students have won outstanding paper awards at top-tier international conferences such as the IEEE Intelligent Vehicles Symposium and the China Conference on Intelligent Robotics. He has received the Shanghai Technology Invention First Prize, the Shanghai Teaching Achievement First Prize and the Ministry of Education Technology Invention Award, among other accolades. Research interests: Low-speed unmanned driving systems.